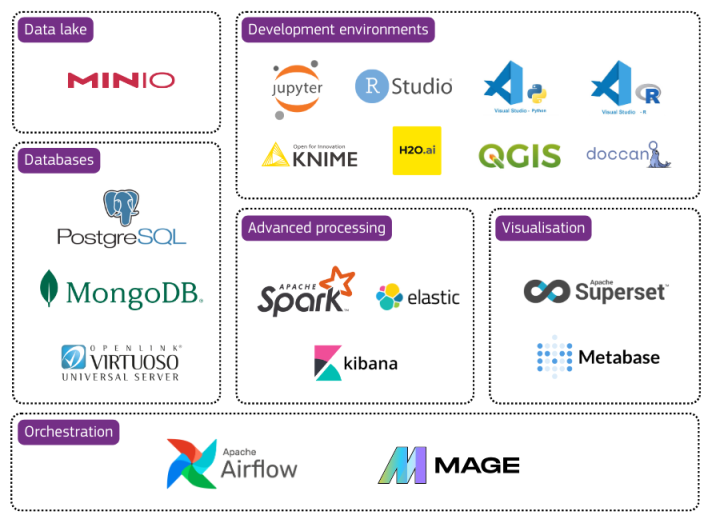

Databases

Database solutions are made available to store data and perform queries on the stored data. BDTI currently includes a relational database (PostgreSQL), a document-oriented database (MongoDB) and a graph database (Virtuoso).

PostgreSQL

A PostgreSQL relational database is available to store data in a predefined, structured format so that it can be queried and analysed. The PostGIS extension, which adds support for geospatial data, was added in the latest version.
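
As an illustration of what "predefined structure plus querying" means in practice, here is a minimal sketch. It uses Python's built-in `sqlite3` module purely as a stand-in so that it runs without a database server; it does not connect to BDTI's PostgreSQL instance, and the `measurements` table and its columns are hypothetical.

```python
import sqlite3

# sqlite3 stands in for PostgreSQL so the sketch runs without a server.
# The "measurements" table and its columns are hypothetical.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# The schema is defined up front: every row must fit these typed columns.
cur.execute(
    """
    CREATE TABLE measurements (
        id INTEGER PRIMARY KEY,
        country TEXT NOT NULL,
        year INTEGER NOT NULL,
        value REAL NOT NULL
    )
    """
)

rows = [
    ("BE", 2022, 10.5),
    ("BE", 2023, 11.0),
    ("FR", 2023, 8.25),
]
cur.executemany(
    "INSERT INTO measurements (country, year, value) VALUES (?, ?, ?)", rows
)
conn.commit()

# Aggregate query: average value per country, ordered by country code.
cur.execute(
    "SELECT country, AVG(value) FROM measurements GROUP BY country ORDER BY country"
)
averages = cur.fetchall()
print(averages)  # [('BE', 10.75), ('FR', 8.25)]
conn.close()
```

With a PostgreSQL driver such as psycopg, the SQL stays largely the same, although query placeholders are written as `%s` rather than `?`.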

MongoDB

MongoDB, a document-oriented database, is available for storing JSON-like documents with optional schemas. MongoDB is a distributed database at its core, so high availability, horizontal scaling, and geographic distribution are built in and easy to use.
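
To show what "optional schemas" means, the sketch below keeps a few hypothetical documents that do not all share the same fields and filters them by field value. The `find` helper is hypothetical and only mimics simple equality filters on scalar top-level fields; it is not MongoDB's query engine. With PyMongo, a comparable query would be `collection.find({"kind": "sensor"})`.

```python
from typing import Any

# Hypothetical in-memory "collection": documents need not share the same fields.
documents: list[dict[str, Any]] = [
    {"_id": 1, "kind": "sensor", "reading": 21.5},
    {"_id": 2, "kind": "sensor", "reading": 19.0, "unit": "C"},
    {"_id": 3, "kind": "note", "text": "calibrated"},
]


def find(collection: list[dict[str, Any]], query: dict[str, Any]) -> list[dict[str, Any]]:
    """Return documents whose top-level fields equal every value in `query`.

    Hypothetical helper: covers only simple equality on scalar fields.
    """
    return [
        doc
        for doc in collection
        if all(key in doc and doc[key] == value for key, value in query.items())
    ]


sensors = find(documents, {"kind": "sensor"})
print([doc["_id"] for doc in sensors])  # [1, 2]
```

Note that document 2 carries an extra `unit` field and document 3 has no `reading` at all; neither needs a schema change.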

Virtuoso

Virtuoso, a graph database, is available and can be used to collect data of every type, wherever it lives, and to use hyperlinks as super-keys for creating a change-sensitive and conceptually flexible web of linked data.
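
Linked data of the kind Virtuoso stores is commonly expressed as RDF triples (subject, predicate, object) and queried with SPARQL. The plain-Python sketch below, which does not use Virtuoso, illustrates the "hyperlinks as super-keys" idea: URIs identify things globally, so a two-hop join needs no foreign-key mapping. All `example.org` URIs and the `match` helper are hypothetical.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Hypothetical linked-data triples (subject, predicate, object).
# URIs act as globally unique keys, so facts from different sources can be joined.
EX = "http://example.org/"
triples: list[Triple] = [
    (EX + "dataset/air-quality", EX + "publishedBy", EX + "org/agency-a"),
    (EX + "dataset/traffic", EX + "publishedBy", EX + "org/agency-a"),
    (EX + "org/agency-a", EX + "locatedIn", EX + "place/brussels"),
]


def match(pattern: tuple[Optional[str], Optional[str], Optional[str]]) -> list[Triple]:
    """Return triples matching the pattern; None acts as a wildcard."""
    return [
        t for t in triples
        if all(p is None or p == v for p, v in zip(pattern, t))
    ]


# Roughly: SELECT ?d WHERE { ?d <http://example.org/publishedBy> <http://example.org/org/agency-a> }
datasets = [s for s, _, _ in match((None, EX + "publishedBy", EX + "org/agency-a"))]

# Two-hop join via the shared publisher URI: where is each dataset's publisher located?
locations = {
    s: place
    for s, _, publisher in match((None, EX + "publishedBy", None))
    for _, _, place in match((publisher, EX + "locatedIn", None))
}
print(datasets)
print(locations)
```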

Data lake

A data lake solution is available to store large amounts of structured data and raw, unstructured data. The raw data can be processed further by other deployed building blocks (BDTI components) and then stored in a more structured format within the data lake.
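
The raw-to-structured flow described above can be sketched as follows. In practice the raw and curated data would be objects in the data lake (for example in MinIO); here both are in-memory strings, and the "raw zone" / "curated zone" naming and the sensor records are hypothetical. Malformed input is set aside rather than silently dropped.

```python
import csv
import io
import json

# Hypothetical raw zone: newline-delimited JSON as it arrived, including a malformed line.
raw_zone = '{"sensor": "s1", "temp": "21.5"}\n{"sensor": "s2", "temp": "19"}\nnot-json\n'

records = []
rejected = []
for line in raw_zone.splitlines():
    try:
        event = json.loads(line)
        # Normalise field names and types while parsing.
        records.append({"sensor": event["sensor"], "temp_c": float(event["temp"])})
    except (KeyError, ValueError):  # json.JSONDecodeError is a subclass of ValueError
        rejected.append(line)

# Curated zone: the same data in a fixed tabular layout (CSV here).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["sensor", "temp_c"])
writer.writeheader()
writer.writerows(records)
curated_zone = buffer.getvalue()
print(curated_zone)
```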

MinIO

MinIO, a high-performance, Kubernetes-native object store, is made available so users can store unstructured data such as photos, videos, log files, and backups.

Development environments

Development environments provide the computing capabilities and the tools required to perform standard data analytics activities on data from external data sources such as data lakes and databases.

JupyterLab

JupyterLab is a web-based interactive development environment for Jupyter notebooks, code, and data.

RStudio

RStudio is an Integrated Development Environment (IDE) for R, a programming language for statistical computing and graphics.

KNIME

KNIME is an open-source data analytics, reporting, and integration platform. It integrates various machine learning and data mining components and can be used throughout the data science life cycle.

H2O.ai

H2O.ai is an open-source machine learning and artificial intelligence platform designed to simplify and accelerate building, operating, and innovating with ML and AI in any environment.

VS Code - Python

The VS Code Python extension enhances Visual Studio Code with robust support for Python development, providing features such as IntelliSense, debugging, linting, Jupyter Notebook integration, and more.

VS Code - R

The VS Code R extension enhances Visual Studio Code with robust support for R programming, offering features like syntax highlighting, debugging, linting, R Markdown integration, and more.

QGIS

QGIS is an open-source Geographic Information System that enables users to create, edit, visualise, analyse, and publish geospatial information on Windows, Mac, and Linux platforms.

Doccano

Doccano is an open-source annotation tool for text data. It is designed to facilitate the creation of labelled datasets for natural language processing (NLP) tasks and provides a web-based interface for annotating text.

Advanced processing

Clusters and tools can be set up for processing large volumes of data and performing real-time search operations.

Apache Spark

Apache Spark is available and can be used to set up Spark clusters: compute clusters that run big data applications and process large volumes of data in a distributed way.
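
The core idea behind Spark's distributed processing is to split a dataset into partitions, process each partition independently, and then combine the partial results. The sketch below illustrates that map/reduce pattern with a word count in plain Python; it does not use Spark, and threads merely stand in for cluster executors. In PySpark the same job would typically be written with RDD transformations such as `flatMap`, `map` and `reduceByKey`. The input lines are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

# Hypothetical input, split into partitions as Spark splits a dataset across executors.
lines = [
    "big data needs distributed processing",
    "spark distributes data processing",
    "data data data",
    "clusters process data in parallel",
]
num_partitions = 2
partitions = [lines[i::num_partitions] for i in range(num_partitions)]


def count_words(partition: list[str]) -> Counter:
    """Map step: count words locally within one partition."""
    return Counter(word for line in partition for word in line.split())


# Threads stand in for executors; each processes one partition independently.
with ThreadPoolExecutor(max_workers=num_partitions) as pool:
    partial_counts = list(pool.map(count_words, partitions))

# Reduce step: merge the per-partition counts into a global result.
totals = reduce(lambda a, b: a + b, partial_counts, Counter())
print(totals.most_common(1))  # [('data', 6)]
```

Because each partition is counted without looking at the others, adding partitions (or machines) scales the map step; only the small per-partition results have to be merged.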

Elasticsearch

Elasticsearch is a distributed search and analytics engine that performs real-time searches for a wide variety of use cases, such as storing and analysing logs, managing and integrating spatial information, and more.
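
Elasticsearch is built on Apache Lucene, which answers full-text queries with an inverted index: a map from each term to the documents containing it. The plain-Python sketch below (not the Elasticsearch API) builds such an index over a few hypothetical log lines and runs an AND query. Real Elasticsearch analysers, relevance scoring and sharding are far richer than this simplified tokeniser.

```python
import re
from collections import defaultdict

# Hypothetical log lines to index; keys play the role of document IDs.
docs = {
    1: "ERROR disk full on node-1",
    2: "WARN high memory usage on node-2",
    3: "ERROR connection timeout on node-2",
}


def tokenize(text: str) -> list[str]:
    """Simplified analyser: lowercase, then split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


# Build the inverted index: term -> set of IDs of documents containing it.
index: dict[str, set[int]] = defaultdict(set)
for doc_id, text in docs.items():
    for term in tokenize(text):
        index[term].add(doc_id)


def search(query: str) -> list[int]:
    """Return IDs of documents containing every query term (AND semantics)."""
    postings = [index.get(term, set()) for term in tokenize(query)]
    if not postings:
        return []
    return sorted(set.intersection(*postings))


print(search("error node-2"))  # [3]
```

Lookups touch only the posting sets of the query terms instead of scanning every document, which is what keeps search fast as the corpus grows.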

Kibana

Kibana is your window into the Elastic Stack. Specifically, it is a browser-based analytics and search dashboard for Elasticsearch.

Visualisation

A data visualisation application is available for representing information in the form of common graphics such as charts, diagrams, plots, infographics, and even animations.

Apache Superset

Apache Superset is an open-source, modern data exploration and visualisation platform that can handle data at petabyte scale (big data).

Metabase

Metabase is quick to set up, connects to your database, and presents its data as visualisations. Its intuitive interface makes data exploration accessible to everyone, not just analysts and developers.

Orchestration

A data orchestration application is made available for automating data-driven processes from end to end, including preparing data, making decisions based on that data, and taking actions based on those decisions. The data orchestration process can span many different systems and types of data.

Apache Airflow

Apache Airflow is an open-source workflow management platform that allows you to schedule and run complex data pipelines easily.
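
Airflow models a pipeline as a DAG (directed acyclic graph) of tasks and runs each task only after the tasks it depends on have finished. The sketch below illustrates that scheduling rule with Python's standard `graphlib` module (Python 3.9+); it is not Airflow's API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
# In Airflow these dependencies would be declared on a DAG, e.g. extract >> transform.
dependencies = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"extract"},
    "load": {"validate", "transform"},
}

executed: list[str] = []


def run(task: str) -> None:
    """Placeholder task body; a real scheduler would launch the actual work here."""
    executed.append(task)


# static_order() yields tasks so that each one comes after all of its dependencies.
for task in TopologicalSorter(dependencies).static_order():
    run(task)

print(executed)
```

`validate` and `transform` both depend only on `extract`, so their relative order is not fixed; a scheduler such as Airflow's can run tasks like these in parallel.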

Mage

Mage is an open-source tool designed as a modern alternative to Airflow for building, running, and managing data pipelines. It facilitates data integration and transformation by allowing users to develop Python, SQL, or R pipelines.